A major data exposure incident has been uncovered involving the iOS app FlirtAI – Get Rizz & Dates, which leaked over 160,000 private chat screenshots through misconfigured cloud storage. The breach stemmed from an unsecured Google Cloud Storage bucket belonging to Buddy Network GmbH, the Berlin-based developer behind the app.

FlirtAI markets itself as an AI-powered wingman, allowing users, many of them teenagers, to upload screenshots of chat conversations and dating profiles in exchange for tailored message suggestions. However, the app left the screenshots submitted for analysis exposed, and much of that sensitive data belonged to people who never consented to having their conversations shared.

“Due to the nature of the app, people most affected by the leak may be unaware that screenshots of their conversations even exist, let alone that they could be leaked on the internet,” noted the Cybernews research team, which discovered the leak.

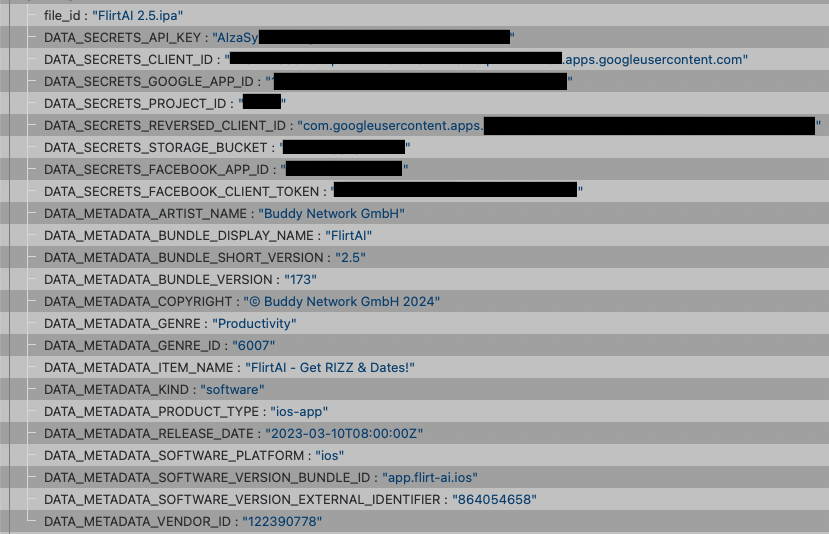

Sample of the leaked data by Cybernews.

What Data Was Exposed and Why It Matters

The leaked dataset primarily includes screenshots from messaging apps and dating platforms. These images were submitted by FlirtAI users looking for help crafting responses in personal or romantic conversations. While the app asked users to obtain consent before uploading screenshots, this expectation was clearly unrealistic, especially for its largely teenage user base.

Many of the screenshots contained identifying information about the other person in the conversation, not necessarily the user of the app. According to Cybernews, chat app UI designs often make it easier to identify the conversation partner than the user themselves. This raises significant concerns, as most of these individuals likely had no idea their chats had been uploaded to a third-party AI service, let alone exposed publicly.

FlirtAI encourages users to “Snap a screenshot of your match’s profile or chat… and let the AI work its magic,” delivering five suggested replies based on the uploaded content. But that “magic” now carries a privacy cost—particularly for minors.

“The fact that teenagers used this app may increase the severity of a potential data breach,” the researchers emphasized, adding that data belonging to minors is more sensitive and subject to stricter data protection regulations.

Risks to Mental Health and Data Protection Violations

The implications of this breach extend beyond digital privacy. FlirtAI’s user base likely includes individuals dealing with self-esteem challenges, social anxiety, or dating inexperience. Having their private chats or profile interactions leaked, even without their knowledge, can lead to emotional distress, embarrassment, or harassment.

The app has an age rating of 17+ due to “mature/suggestive themes” and “crude humor,” but this did not deter younger users. As a company registered in Germany, Buddy Network GmbH is subject to GDPR, one of the world’s strictest data protection laws. The exposure of data—especially from minors—may bring further regulatory scrutiny.

Buddy Network GmbH has other AI-focused products on the App Store, including “Angel – Talk to Me at Any Time” and “90 Seconds – Your AI Journal.” All of these apps involve user-generated content that could pose similar risks if mishandled.

iOS Apps and a Pattern of Weak Security

This incident is the latest in a growing trend of privacy failures by mobile apps. The Cybernews team has previously reported on numerous iOS applications leaking private photos, messages, or even credentials. Their large-scale investigation revealed that of 156,000 iOS apps reviewed, 71% exposed at least one secret, often due to hardcoded credentials or misconfigured storage.
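To make the idea of a hardcoded secret concrete, here is a minimal sketch of a scan over source or configuration text. The two patterns are commonly cited formats for Google API keys and AWS access key IDs and are assumptions chosen for illustration. The function name `find_secrets` is hypothetical, and nothing here represents the method the Cybernews team used.

```python
import re

# Illustrative patterns only (assumed formats); real scanners use much larger rule sets.
SECRET_PATTERNS = {
    "google_api_key": re.compile(r"AIza[0-9A-Za-z_\-]{35}"),
    "aws_access_key_id": re.compile(r"\bAKIA[0-9A-Z]{16}\b"),
}


def find_secrets(text: str) -> list[tuple[str, str]]:
    """Return (pattern_name, matched_text) pairs for every match in text."""
    hits = []
    for name, pattern in SECRET_PATTERNS.items():
        for match in pattern.finditer(text):
            hits.append((name, match.group(0)))
    return hits
```

Running a check like this over an app bundle's strings or a repository before release is one inexpensive way to catch credentials that should have been kept on the server.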

Apps in sensitive niches—such as dating, private messaging, or mental health—have been among the worst offenders. Some LGBTQ+, BDSM, and sugar dating platforms were found to leak users’ private photos, often those shared in supposedly secure private conversations.

With AI integration becoming more widespread across consumer apps, this breach serves as a stark reminder of the hidden trade-offs between convenience and security. Users entrust these services with intimate parts of their lives, often without fully understanding where that data is going or how it’s stored.

Lessons for Enterprises and Security Professionals

Incidents like this highlight the urgent need for robust cloud security, user data anonymization, and stronger compliance with data protection laws—especially for companies working with AI. Organizations handling user-submitted content must implement strict access controls, regular vulnerability testing, and real-time threat monitoring.

For enterprise teams managing sensitive internal applications or consumer platforms with user-generated content, a single storage misconfiguration can lead to irreversible damage to brand trust and regulatory consequences.
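One way to catch this class of misconfiguration is to audit bucket access policies for public grants. The sketch below assumes the policy has already been fetched and parsed into the standard Cloud IAM shape (a `bindings` list of `role` and `members` entries) and flags the special members `allUsers` and `allAuthenticatedUsers`. The function name and the example policy are hypothetical; the report does not describe how the FlirtAI bucket was actually configured.

```python
# Special IAM members that make a resource reachable by anyone
# (allUsers) or by any signed-in Google account (allAuthenticatedUsers).
PUBLIC_MEMBERS = {"allUsers", "allAuthenticatedUsers"}


def public_bindings(policy: dict) -> list[tuple[str, str]]:
    """Return (role, member) pairs that grant access to the public."""
    findings = []
    for binding in policy.get("bindings", []):
        role = binding.get("role", "")
        for member in binding.get("members", []):
            if member in PUBLIC_MEMBERS:
                findings.append((role, member))
    return findings


# Hypothetical policy for illustration; not the actual FlirtAI configuration.
example_policy = {
    "bindings": [
        {"role": "roles/storage.objectViewer", "members": ["allUsers"]},
        {"role": "roles/storage.admin", "members": ["user:admin@example.com"]},
    ]
}
```

Any non-empty result for a bucket that holds user uploads would warrant immediate review, and a check like this can run on a schedule so that an accidental public grant does not go unnoticed.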

One key step toward preparedness is ensuring critical data has an immutable, air-gapped backup that can’t be compromised even if primary systems are breached.
